Publications

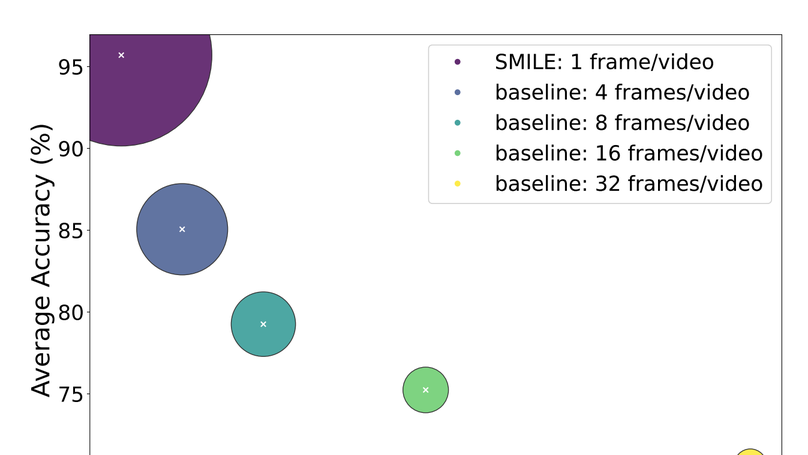

Class-incremental learning is one of the most important settings for the study of Continual Learning, as it closely resembles real-world application scenarios. With constrained memory sizes, catastrophic forgetting arises as the number of classes/tasks increases. Studying continual learning in the video domain poses even more challenges, as video data contains a large number of frames, which places a higher burden on the replay memory. The current common practice is to sub-sample frames from the video stream and store them in the replay memory. In this paper, we propose SMILE, a novel replay mechanism for effective video continual learning based on individual frames. Through extensive experimentation, we show that under extreme memory constraints, video diversity plays a more significant role than temporal information. Therefore, our method focuses on learning from a small number of frames that represent a large number of unique videos. On three representative video datasets, Kinetics, UCF101, and ActivityNet, the proposed method achieves state-of-the-art performance, outperforming the previous state-of-the-art by up to 21.49%.
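The trade-off between video diversity and temporal information under a fixed frame budget can be illustrated with a minimal sketch. This is not the SMILE selection procedure. The function `build_frame_memory`, its parameters, and the random frame choice are all assumptions made for illustration.

```python
import random


def build_frame_memory(videos, budget, frames_per_video=1, seed=0):
    """Fill a replay memory of at most `budget` frames (hypothetical helper).

    videos: list of (video_id, num_frames) pairs.
    frames_per_video: frames stored per video; 1 maximizes the number of
    distinct videos that fit in the budget.
    Returns a list of (video_id, frame_index) entries.
    """
    rng = random.Random(seed)
    memory = []
    for video_id, num_frames in videos:
        # Stop once the next video would exceed the frame budget.
        if len(memory) + frames_per_video > budget:
            break
        k = min(frames_per_video, num_frames)
        # Assumption: frames are picked uniformly at random within a video.
        for idx in sorted(rng.sample(range(num_frames), k)):
            memory.append((video_id, idx))
    return memory


videos = [(f"video_{i}", 30) for i in range(10)]
single = build_frame_memory(videos, budget=8, frames_per_video=1)
clips = build_frame_memory(videos, budget=8, frames_per_video=4)
print(len({v for v, _ in single}), "videos with 1 frame each")
print(len({v for v, _ in clips}), "videos with 4 frames each")
```

With the same budget of 8 frames, storing one frame per video covers 8 distinct videos, while storing 4 frames per video covers only 2.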

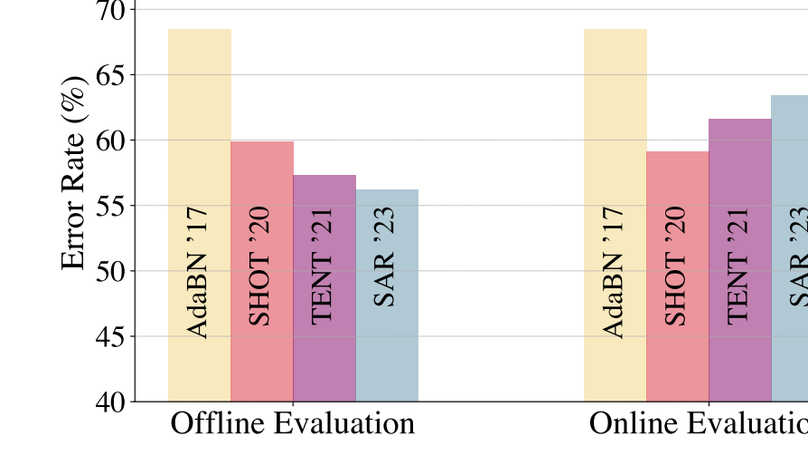

This paper proposes a novel online evaluation protocol for Test Time Adaptation (TTA) methods, which penalizes slower methods by providing them with fewer samples for adaptation. TTA methods leverage unlabeled data at test time to adapt to distribution shifts. Though many effective methods have been proposed, their impressive performance usually comes at the cost of significantly increased computation budgets. Current evaluation protocols overlook the effect of this extra computation cost, which affects the real-world applicability of these methods. To address this issue, we propose a more realistic evaluation protocol for TTA methods, where data is received in an online fashion from a constant-speed data stream, thereby accounting for the method’s adaptation speed. We apply our proposed protocol to benchmark several TTA methods on multiple datasets and scenarios. Extensive experiments show that, when accounting for inference speed, simple and fast approaches can outperform more sophisticated but slower methods. For example, SHOT from 2020 outperforms the state-of-the-art method SAR from 2023 under our online setting. Our online evaluation protocol emphasizes the need for developing TTA methods that are efficient and applicable in realistic settings.
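The idea of a constant-speed stream that gives slower methods fewer samples to adapt on can be sketched as follows. This is a simplified illustration, not the paper's protocol: the function `samples_seen_for_adaptation` and the integer `relative_cost` parameter are assumptions, and the sketch does not model how the skipped samples are predicted.

```python
def samples_seen_for_adaptation(num_samples, relative_cost):
    """Return the stream indices a method gets to adapt on (hypothetical helper).

    The stream emits one sample per time step. `relative_cost` is the number
    of time steps the method needs to process one sample (1 means it keeps up
    with the stream; 3 means it is three times slower than the stream).
    """
    adapted = []
    busy_until = 0  # first time step at which the method is free again
    for t in range(num_samples):
        if t >= busy_until:
            adapted.append(t)
            busy_until = t + relative_cost
        # Otherwise the method is still busy and misses sample t.
    return adapted


fast = samples_seen_for_adaptation(num_samples=9, relative_cost=1)
slow = samples_seen_for_adaptation(num_samples=9, relative_cost=3)
print(len(fast), "samples adapted on by the fast method")
print(len(slow), "samples adapted on by the slow method")
```

In this toy setting the method that is three times slower than the stream adapts on only a third of the samples, which is how slower methods are penalized in the evaluation.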

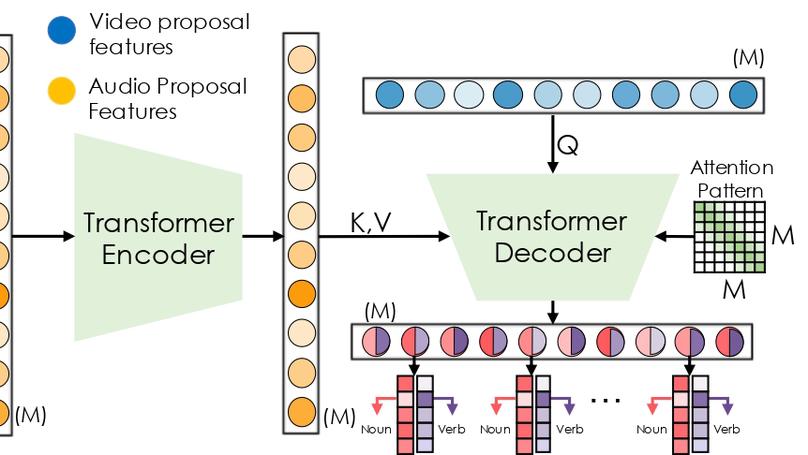

Egocentric videos capture sequences of human activities from a first-person perspective and can provide rich multi-modal signals. However, most current localization methods use third-person videos and only incorporate visual information. In this work, we take a deep look into the effectiveness of audiovisual context in detecting actions in egocentric videos and introduce a simple yet effective approach via Observing, Watching, and Listening (OWL). OWL leverages audiovisual information and context for egocentric Temporal Action Localization (TAL). We validate our approach on two large-scale datasets, EPIC-KITCHENS and HOMAGE. Extensive experiments demonstrate the relevance of the audiovisual temporal context. Namely, we boost the localization performance (mAP) over visual-only models by +2.23% and +3.35% on these datasets.

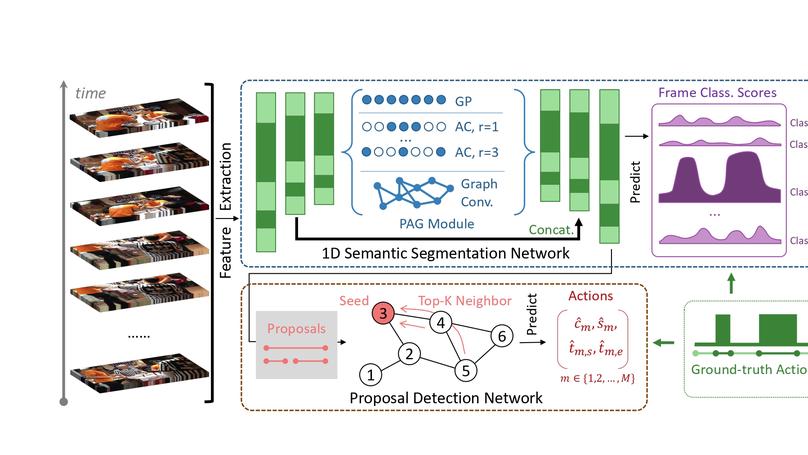

Temporal action detection (TAD) is an important yet challenging task in video analysis. Most existing works draw inspiration from image object detection and tend to reformulate it as a proposal generation - classification problem. However, there are two caveats with this paradigm. First, proposals are not equipped with annotated labels, which have to be empirically compiled, so the information in the annotations is not necessarily used precisely in the model training process. Second, there are large variations in the temporal scale of actions, and neglecting this fact may lead to deficient representations in the video features. To address these issues and precisely model TAD, we formulate the task from the novel perspective of semantic segmentation. Owing to the 1-dimensional property of TAD, we are able to convert the coarse-grained detection annotations to fine-grained semantic segmentation annotations for free.
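Converting detection annotations into segmentation annotations along the 1-dimensional time axis can be sketched as below. This is a minimal illustration under assumed conventions, not the authors' implementation: segments are taken to be `(start, end, class_id)` tuples in frame units with an exclusive end, and class 0 is assumed to denote background.

```python
def segments_to_frame_labels(num_frames, segments, background=0):
    """Expand (start, end, class_id) segments into one label per frame.

    `start` is inclusive and `end` is exclusive, both in frame indices.
    Frames not covered by any segment keep the `background` label.
    If segments overlap, later segments overwrite earlier ones.
    """
    labels = [background] * num_frames
    for start, end, class_id in segments:
        # Clip the segment to the valid frame range.
        for i in range(max(start, 0), min(end, num_frames)):
            labels[i] = class_id
    return labels


frame_labels = segments_to_frame_labels(8, [(1, 3, 2), (5, 7, 1)])
print(frame_labels)
```

Each frame receives the class of the action segment that covers it, so no extra labeling effort is needed beyond the original start and end annotations.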

We introduce Ego4D, a massive-scale egocentric video dataset and benchmark suite. It offers 3,670 hours of daily-life activity video spanning hundreds of scenarios (household, outdoor, workplace, leisure, etc.) captured by 931 unique camera wearers from 74 worldwide locations and 9 different countries. The approach to collection is designed to uphold rigorous privacy and ethics standards, with consenting participants and robust de-identification procedures where relevant. Ego4D dramatically expands the volume of diverse egocentric video footage publicly available to the research community. Portions of the video are accompanied by audio, 3D meshes of the environment, eye gaze, stereo, and/or synchronized videos from multiple egocentric cameras at the same event. Furthermore, we present a host of new benchmark challenges centered around understanding the first-person visual experience in the past (querying an episodic memory), present (analyzing hand-object manipulation, audio-visual conversation, and social interactions), and future (forecasting activities). By publicly sharing this massive annotated dataset and benchmark suite, we aim to push the frontier of first-person perception.

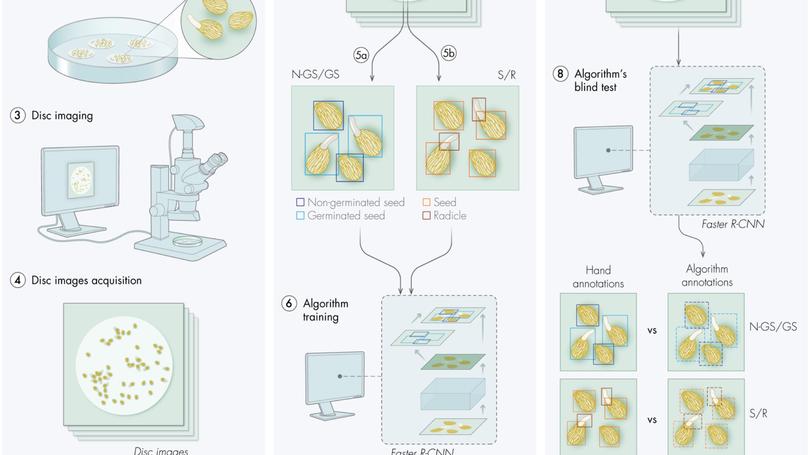

Witchweeds and broomrapes are root parasitic weeds that represent one of the main threats to global food security. By drastically reducing host crops’ yield, the parasites are often responsible for enormous economic losses, estimated at billions of dollars annually. Parasitic plants rely on a chemical cue in the rhizosphere that indicates the presence of a host plant in proximity. Exploiting this host dependency, research on parasitic plants focuses on understanding the triggers necessary for parasitic seed germination, to either reduce germination in the presence of crops or provoke germination without hosts (i.e., suicidal germination). For this purpose, a number of synthetic analogs and inhibitors have been developed and their biological activities studied on parasitic plants around the world using various protocols. Current studies use germination-based bioassays, in which pre-conditioned parasitic seeds are placed in the presence of a chemical or plant root exudates and the germination ratio is then assessed. Although these protocols are very sensitive at the chemical level, recording the germination rate is time-consuming and challenging for researchers, and could easily be sped up by leveraging automated seed detection algorithms. To accelerate such protocols, we propose an automatic seed censing tool using the latest developments in computer vision. We use a deep learning approach for object detection, the Faster R-CNN algorithm, to count seeds and discriminate germinated from non-germinated ones. Our method has shown an accuracy of 95% in counting seeds on completely new images, and reduces the counting time from 5 min to a fraction of a second per image. We believe our proposed software, “SeedQuant”, will be of great help for lab bioassays to perform large-scale chemical screening for parasitic seed applications.
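Once a detector has labeled each seed in an image, the germination ratio reduces to a simple count. The sketch below assumes detections arrive as `(label, score)` pairs with the labels `"germinated"` and `"non_germinated"` and a score threshold of 0.5. These names and the threshold are illustrative assumptions and are not taken from SeedQuant.

```python
from collections import Counter


def germination_ratio(detections, min_score=0.5):
    """Compute the fraction of germinated seeds among confident detections.

    detections: iterable of (label, score) pairs, where label is either
    "germinated" or "non_germinated" (assumed label names).
    Detections scoring below `min_score` are ignored.
    Returns 0.0 when no detection passes the threshold.
    """
    counts = Counter(label for label, score in detections if score >= min_score)
    total = counts["germinated"] + counts["non_germinated"]
    return counts["germinated"] / total if total else 0.0


detections = [
    ("germinated", 0.9),
    ("non_germinated", 0.8),
    ("germinated", 0.4),  # below the threshold, ignored
    ("germinated", 0.7),
]
ratio = germination_ratio(detections)
print(f"germination ratio: {ratio:.2f}")
```

Here two of the three confident detections are germinated seeds, so the ratio is 2/3.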